Gemini Cloud Assist Investigations:

Solving the "Black Box" Problem in Enterprise AI

0 to 1 Product Design, Agentic Workflows, Probabilistic UX

Staff UX Designer & Strategy Lead

Disclaimer

The following case study is personal and does not necessarily represent Google’s positions, strategies, or opinions. I have omitted and obfuscated confidential information.

Client: Google Cloud

Role: Staff UX Designer

Type: Probabilistic UX, Google Cloud Platform, Agentic Workflows

Timeframe: Sep 2023–Present

Summary

This case study chronicles the evolution of Gemini Cloud Assist Investigations, a "0 to 1" initiative I championed from a concept deck to a strategic platform pillar. It traces how we shifted the cloud operations paradigm from "Passive Monitoring" to "Active Reasoning."

Overview

Strategic Problem

In the high-stakes world of Site Reliability Engineering (SRE), "Mean Time To Resolution" (MTTR) is the governing metric. Historically, cloud tools were passive: excellent at collecting data but offering no help in reasoning through it. During critical outages, highly skilled engineers were reduced to "human routers," drowning in fragmented signals while the clock ticked.

Action

I partnered with Product Leadership to identify the opportunity for Generative AI to solve the "Correlation" problem. I led the design strategy across the entire product lifecycle: securing the executive mandate through an internal roadshow, designing the "Blast Radius" MVP for the Google Cloud Next '24 Keynote, and architecting the "Glass Box" trust model to enable safe enterprise adoption.

Impact

Strategic & Cultural Transformation: I transformed a grassroots pitch into a core strategic pillar for Google Cloud, earning the Cloud Technical Impact and PSX Trailblazer Awards. This initiative not only defined a new product category but fundamentally shifted the engineering culture from "Deterministic UI" to "Probabilistic UX," establishing the architectural foundation for the agentic era.

The Origin

Friction

In 2022, the daily reality for an SRE was a battle against fragmentation. During a critical outage, users weren't struggling to find data; they were drowning in it. They were forced to act as "human routers," mentally stitching together signals from logs, dashboards, and Slack threads. The tools were passive; the cognitive load fell entirely on the human.

The Spark

The rise of Generative AI presented a "0 to 1" moment. During a collaborative session with a Product Lead, we realized that while LLMs were being hyped for code generation, their true potential in observability was correlation. What if, instead of asking the user to search the haystack for the needle, we built a system that brought the needle to them?

The Campaign

This wasn't a roadmap request; it was an act of intrapreneurship. We knew a standard spec wouldn't sell a vision this abstract.

The Artifact: Instead of following the standard spec process, we created a "North Star" vision deck that told the story of an AI-assisted troubleshooter.

The Roadshow: We pitched Senior Directors not on a "chatbot," but on a new product category.

Mandate

The narrative resonated. We secured dedicated headcount, funding, and a mandate to deliver the flagship demo for the Google Cloud Next '24 Keynote.

The MVP

Situational Awareness (The "Vision Demo")

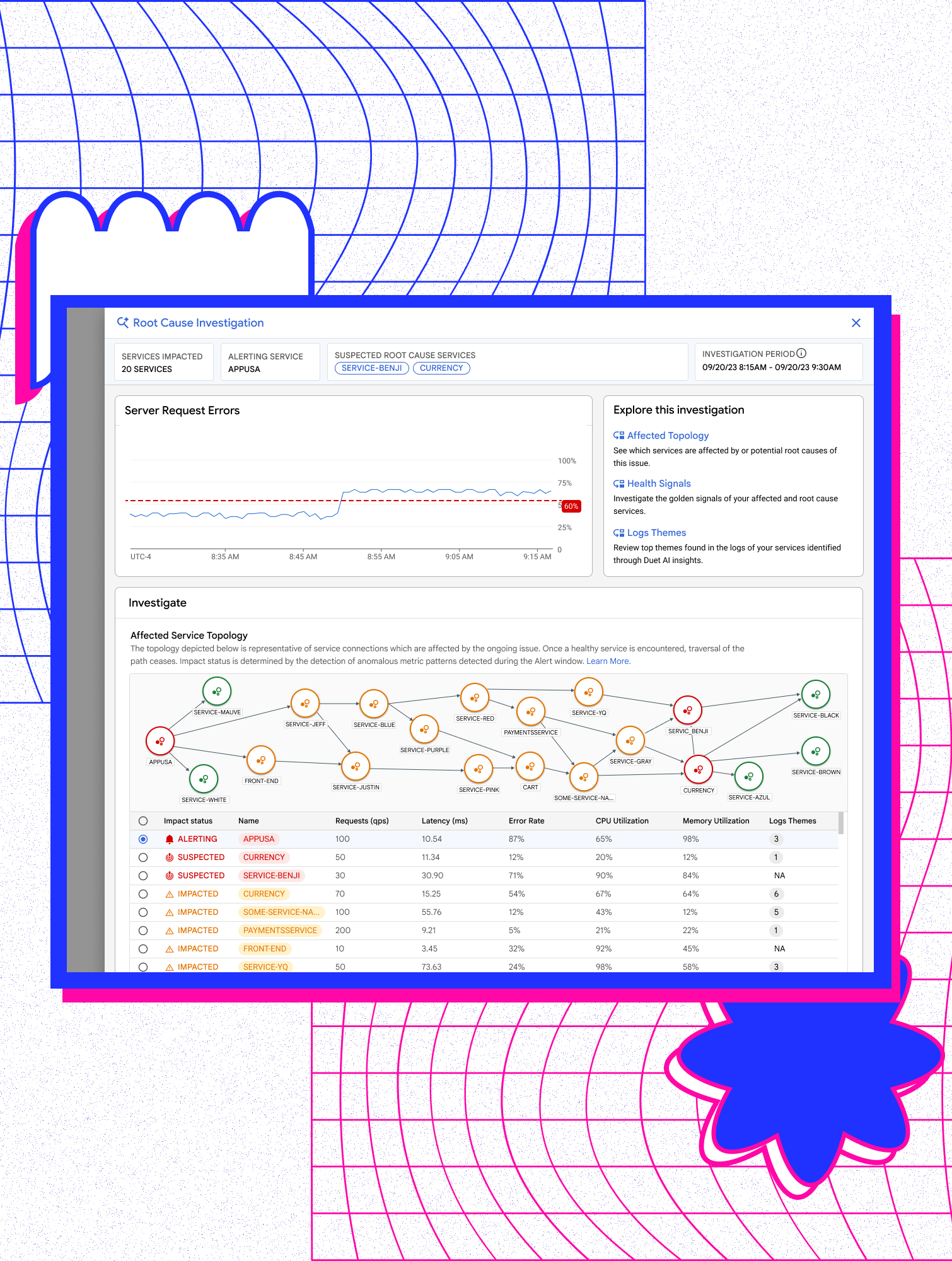

Before an AI can "fix" a problem, it must prove it can "see" it. For the MVP debut, we focused on high-value, deterministic signals to help engineers triage issues instantly.

Design Patterns:

Contextual Topology: I moved the experience beyond list views to visualize the Blast Radius of failure. I designed a network map that instantly highlighted which upstream and downstream services were impacted by an alert.

Health Signal Correlation: Instead of forcing users to check five different dashboards, we aggregated "Red Status" signals across the stack into a single view.

Log Themes: We applied LLMs to group similar logs and translate cryptic error messages into plain English, allowing developers to analyze massive volumes of log data in a fraction of the time.

Outcome

This scope defined the demo for the Cloud Next '24 Keynote. It validated the market demand for AI in Ops, shifting our success metric from "Query Speed" to "Insight Speed."

Evolution & Glass Box Architecture

A Pivot

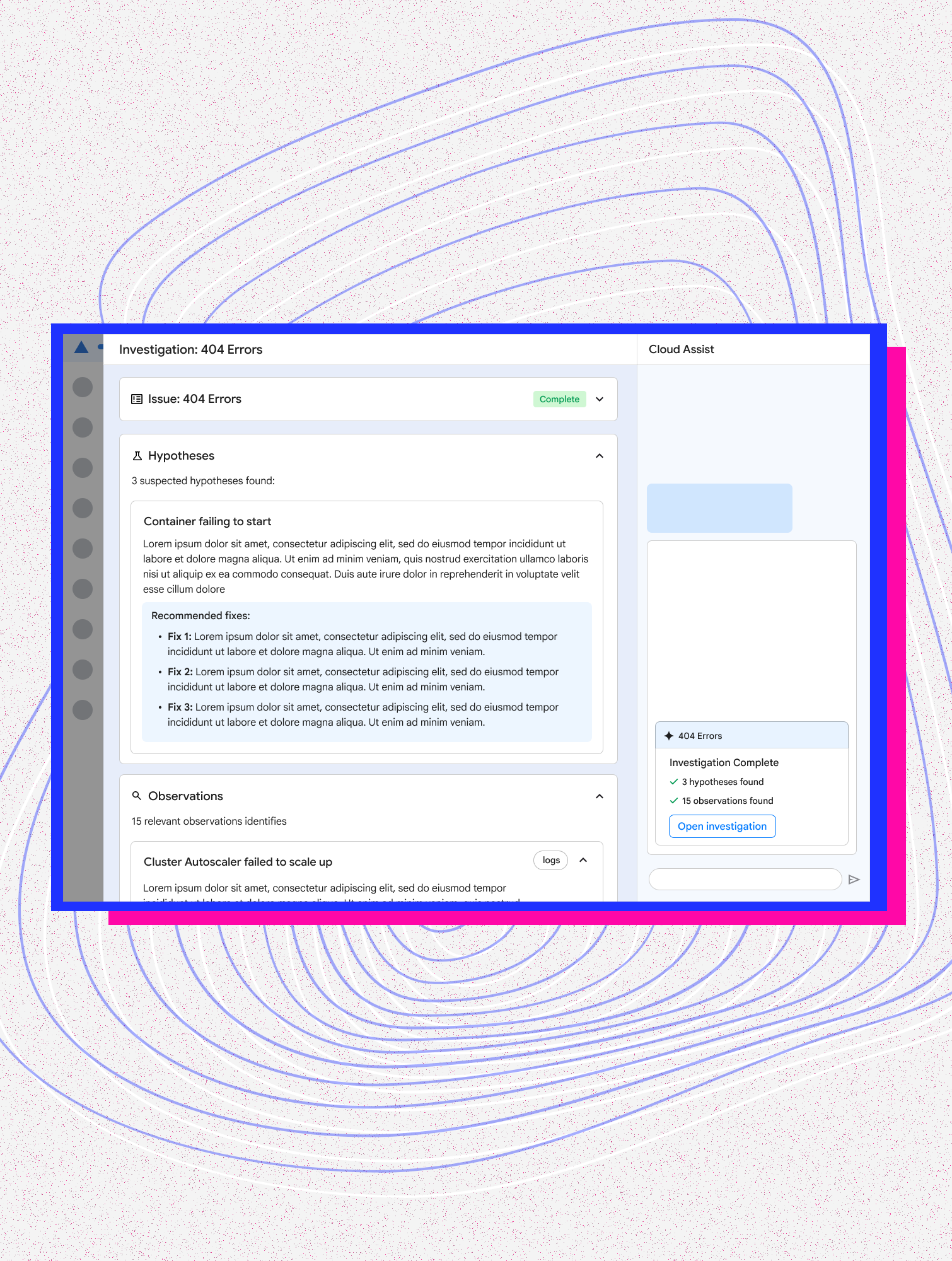

After Next '24, we recognized that the MVP was too focused on the "Happy Path." To truly reduce MTTR, the system needed to move beyond summarizing data to generating hypotheses. This introduced a massive trust barrier: how do you get an expert SRE to trust a machine's "best guess"?

The Architecture of Trust

My strategy was to avoid a single, high-stakes "Magic Answer." Instead, I architected the "Glass Box" model, an interaction pattern that treats the AI as a collaborator, not an oracle.

Principle A: Probable Root Cause

Instead of one conclusion, the system generates ranked theories (e.g., Hypothesis A: Database Connection Pool Exhaustion), acknowledging the ambiguity of the situation. This keeps the expert user in control: the AI surfaces possibilities rather than dictating facts.

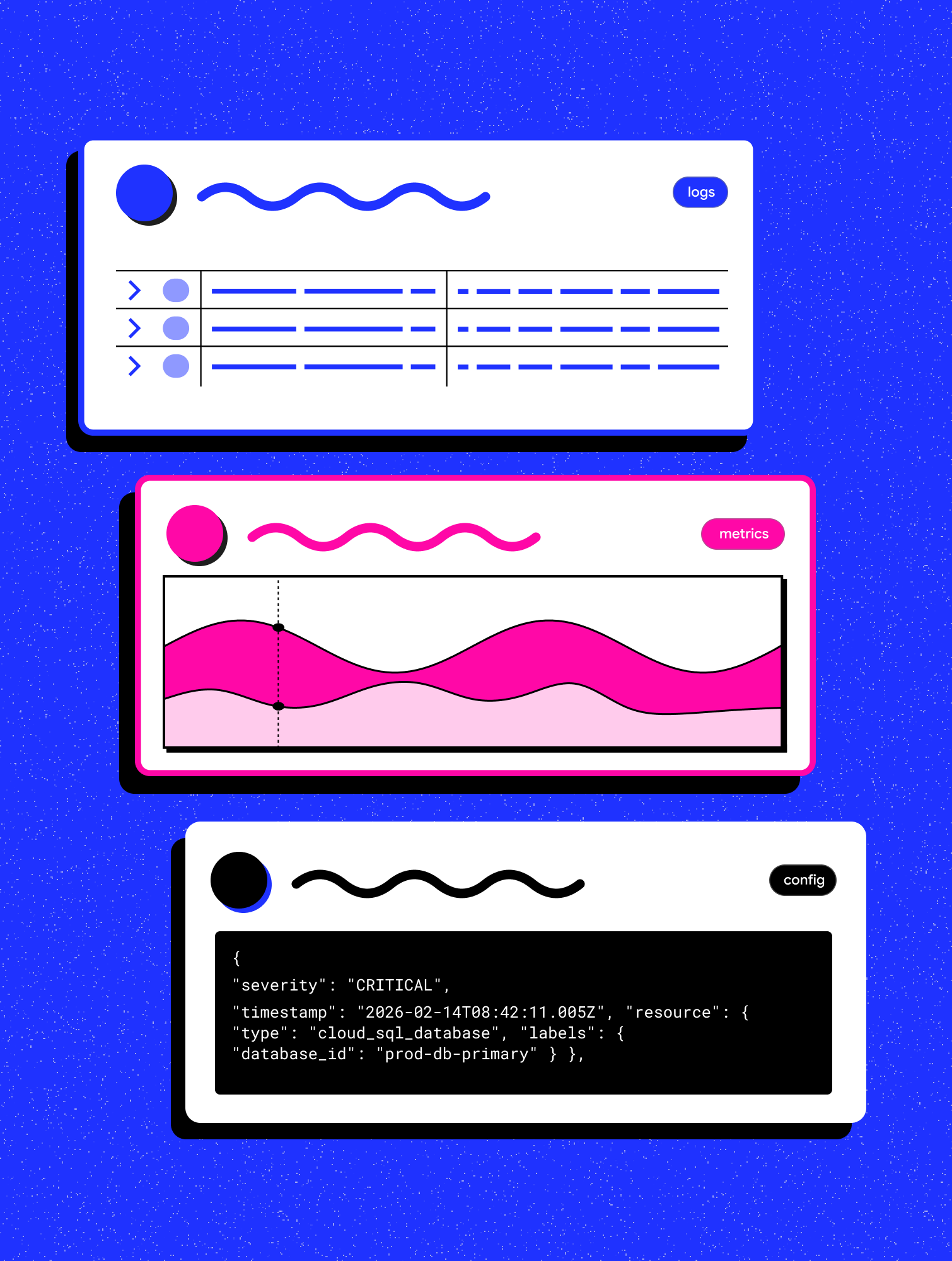

Principle B: Evidence Anchoring (The "Observations" Pattern)

I implemented a strict provenance layer that became the core feature known as "Observations."

The Pattern: Every AI insight is anchored to verifiable telemetry. Observations combine a natural language summary with direct deep-links to the specific Log Snippets, Metric Spikes, or Config Changes that support the theory.

The Result: This transforms the interaction from blind trust to verified reasoning. The user doesn't have to trust the AI; they can trust the evidence.

The Vision

Agentic Readiness

As Google pivots toward the "Agentic Era," the role of the human operator will shift from Doer to Supervisor. The scope of my work has expanded to ensure our troubleshooting tools are ready for this new paradigm.

I am currently leading the definition of the design standards that will govern these future interactions—focusing on the "Human-on-the-Loop" protocols required to safely delegate authority to an AI in a zero-tolerance infrastructure environment.


Learn more about Gemini Cloud Assist.

What people are saying…

Engineering Director O11Y

“Our success at Google Cloud Next can be directly attributed to Ashley's thoughtful and innovative designs that incorporated & balanced UXR feedback, product and engineering requirements effectively. Thank you, Ashley, for all your hard work, leading the team through several key pivots and always maintaining a can-do positive attitude!”

VP Google Cloud Platform